In this Thinking Out Loud interview with Katrien Devolder, philosophy professor Peter Railton presents his take on how to understand, and interact with, AI. He discusses how AI agents can have moral obligations towards humans and towards each other, and why humans have moral obligations towards AI agents. He also stresses that the best way to tackle certain world problems, including the dangers of AI itself, is to form a strong community of both biological and AI agents.

Similar Posts

The Space Between: Post 1

The type of empathy these skeptics tend to attack is affective empathy, namely feeling an emotion consonant with that of another person because that other person is feeling it (or because they are in the situation they are in)….

The ethics of age-selective restrictions for COVID-19 control

Savulescu and Cameron previously proposed that selective liberty restrictions are more likely to be justified when those who have their liberty restricted are those who benefit most from the measures. In the case of COVID-19, with the marked difference in…

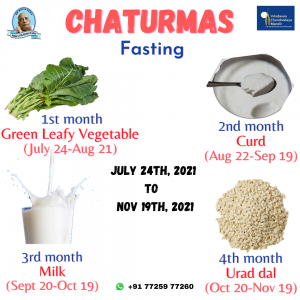

Chaturmas 2021

The Chaturmas period begins in the month of Ashadha (June-July) and ends in the month of Kartika (October-November). Chaturmas means “four months”, which is the duration that Vishnu sleeps. This year it falls from 24th July 2021 to 19th Nov…

How to build a website with WordPress and what are the best plugins to use

How to build a website with WordPress and what are the best plugins to use Building a website with WordPress is an excellent choice due to its versatility, ease of use, and a vast array of plugins that enhance functionality….

Readers reply: could rocks be conscious? Why are some things conscious and some not?

It is very difficult to define consciousness. Sam Harris (quoting the philosopher Thomas Nagel) says that a creature is conscious if there is “something that it is like” to be this creature. It doesn’t seem that rocks have the complexity…

Press release: Battersbee final* appeal rejected

How common are these disputes?Tragically, for a small number of children who become critically ill each year, medicine reaches its limits. For children like Archie, doctors cannot make them better, and advanced medical techniques and technologies may end up doing…