Nineteenth-century physiologist Johannes Müller, for example, argued for vitalism and defended the notion that the activity of the nervous system would never be reducible to physical facts. In Chapter 2 of my book, I describe Müller’s influential views and detail how we came to understand that, in fact, neural activity can be understood in physical terms, specifically as a form of electricity. Knowing what I know now (in Müller’s future), vitalism is foreign and inconceivable to me. I have entire wings of my mental model of the brain dedicated to how neurons use electricity to signal, how these signals carry information, and how this information produces thoughts, emotions, and actions. Vitalism crashes against the very foundation of my knowledge. Trying to internalize it creates confusion and discordance.

Experiencing firsthand the dissonance of holding present and past theories in my mind has altered my own perspective on my science. While I still need to work within the model I have (to publish papers testing the hypotheses it generates, and even to teach it to others), I hold it with a looser grip. I am more open to fundamental retellings of the facts. I more clearly see the wide gaps between our data points, and I feel the uncertain ground our theories stand on. Simply put, I am less invested in my own beliefs.

It may seem silly (unless you are writing a book on it) to try to make Müller’s argument make sense, given that we know quite certainly that it is false. But in our relationship to the past, there is a clear parallel to the future. Insofar as a future neuroscientist’s mental model will not be hospitable to many of my own current beliefs, I would also find theirs foreign. A future (more accurate) understanding of the brain would chafe against my own understanding much the way Müller’s does. The future is as alien as the past.

While many scientists likely know this on some level, I don’t know that they feel it. That is, unless they’ve had the pleasure or pain of living through a paradigm shift in their own area of research, they’ve not experienced what it is like to be so completely off. They haven’t felt the shift of firmly believing things were one way and then being told they are another.

Through history, however, we can experience such things. I would argue this is done best not by simply acknowledging that a field has changed. Rather, a scientist should try to truly understand the pre-change mindset. Engaging in this exercise was one of the unexpected challenges I encountered as a scientist writing a book on history.

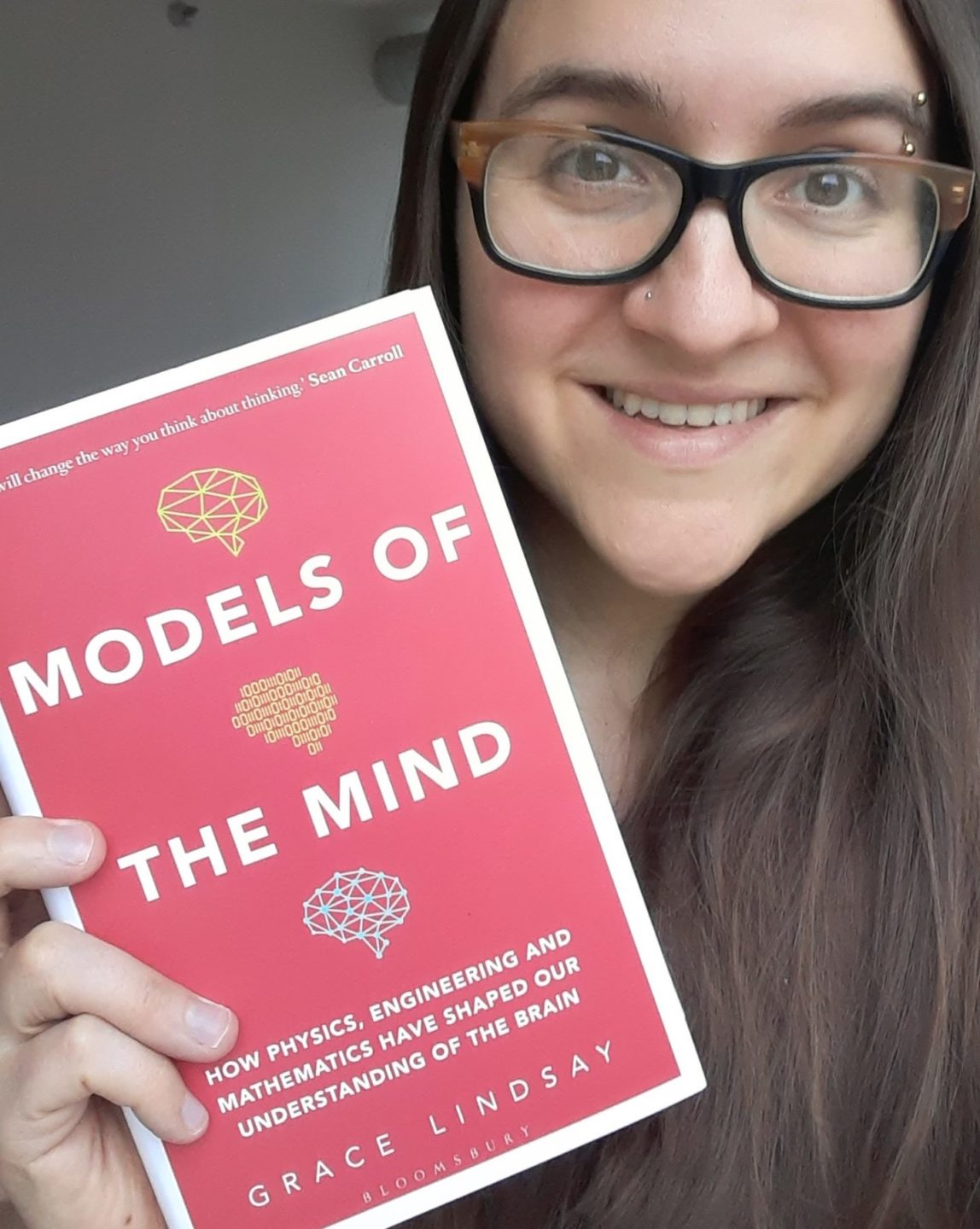

As the publication date neared, it was time to settle on a title for this book of stories. After much critique and many emails’ worth of options, my editor and I finally settled on *Models of the Mind: How physics, engineering and mathematics have shaped our understanding of the brain*. “How”…“have shaped”. If it wasn’t clear to me by then, the subtitle made it undeniable: this is a book about how things happened. It’s a book about history.

On the flip side, this means that scientists right now are working hard to build a worldview that is merely a mirage. They are, unwittingly, putting more and more bricks into a wall that will turn out to be a facade. This is, of course, inevitable. Science only exists on the edge of knowledge. It is core to the scientific endeavor that there is no map, no answers in the back of the book, no power—aside from patience—to determine if we’ve gotten it right.

In this way, a historical perspective can inoculate a scientist against the shock of future paradigm shifts. It can make them more open to receiving wildly new perspectives. And in so doing, it may even make those paradigm shifts arrive sooner. So while I am still not a historian, I believe I’ve become a better scientist for having immersed myself in history.

In science, this is most stark. We know that our current theories of the brain (and so many other things) are wrong. Yet we have a very limited sense of how they need to change. Under the assumption of an inevitable march of progress in science, the best scientists are thus prophets. They see—and can show—now what would otherwise only be known decades down the line.

There is some solace in the fact that correct theories are, given time, easier to understand. Their alignment with reality makes them far more palatable than the false theories of the past. Yet getting to that understanding still requires dismantling current beliefs, a potentially painful process if those beliefs are strongly held. As a child I was raised Catholic, and I believed passionately in the reality that religion provided well into my teens. While my current atheism feels much more comfortable, getting here was not trivial. Even when the end point is a good, solid theory, overcoming our suboptimal worldviews remains a high barrier. It is one that requires a large “cognitive activation energy” to surmount, and this can prevent progress.

I knew I wanted the book to be more than a laundry list of theories and equations with no connective thread or relevance to the reader. I also knew I didn’t want it to be over-sensationalized, offering a picture of the science that the researchers doing the work would not recognize. To tread that line and make an engaging book that did not overstate the science, I decided to focus on stories: stories of ideas about the brain, and the people who carried them, as these ideas morphed and changed over decades through contact with other, more quantitative, fields.

As an amateur historian, however, I was faced with a predicament. The findings I was reading about were wrong, demonstrably so. And yet, in order to tell the stories I wanted to tell I needed to grasp these untruths; I needed to warp my own mindset to match that of past scientists, flaws and all.

I am not a historian. This thought did not occur to me when I set out three years ago to write a book about my field of computational neuroscience, but it has occurred to me many times since.

History isn’t just a list of things that occurred in the past (indeed, the book contains many recent studies as well). Rather, the gift of history is that it tells how things were once different. That the sun rose in the East over ancient Rome and set in the West during the Battle of Gettysburg are true facts of the past. Yet you won’t find them much discussed amongst historians. To make a story out of historical events there must be dynamism and change. There must be a conflict across time: a difference between the way things were and the way they are now. By extension, there must also be a way things will be. Because we are always living in tomorrow’s history, we are surrounded by facts that will later be different. Some we can readily grasp: in time there will be new technologies, a change in fashions, a turnover in world leaders. Other changes are harder to foresee, even if we know they are likely to occur.

As a neuroscientist, I’ve worked hard to construct a delicate yet elaborate tower in my mind; this is my mental model of how I believe the brain “works”. I don’t file away in this tower every detail of every new finding with the author, journal, and year tagged. Instead, I aim to fit the broad conclusions of each new study into this construction. Some studies require the addition of whole new rooms, others new hallways to connect past knowledge, and still others serve as mere wall decoration, adding detail to what was already largely there. Importantly, though, these different parts and pieces fit together: not always perfectly, but well enough to function.