Databasing tools such as the Cognitive Atlas and Neurosynth collect and systematize results from fMRI studies. These databases gather activation results across studies and index them by the cognitive faculties those studies are supposed to measure. For instance, a study of "memory," operationalized by a particular memory task, is entered into the database along with the brain activation values recorded while subjects performed that task. Analytical tools are then used to look for patterns across studies: for example, which activation levels in which parts of the brain correlate most strongly with memory across the full range of studies? A range of analytical techniques, including topic modeling methods such as Latent Dirichlet Allocation, are used to establish these correlations. Details aside, each method attempts to show how cognitive functions are differentially distributed across brain activity. I therefore refer to these as "databasing and brain mapping" projects.
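To make the correlational question concrete, here is a minimal Python sketch. It is not how Neurosynth or the Cognitive Atlas actually compute their maps, and it uses plain Pearson correlation rather than topic modeling or Latent Dirichlet Allocation. The studies, region names, and activation values are invented for illustration. Each hypothetical study is tagged with the cognitive terms it is taken to measure, and regions are ranked by how closely their activation tracks the presence of a given term across studies.

```python
from math import sqrt

# Hypothetical toy data, invented for illustration only: each study is tagged
# with the cognitive terms it claims to measure and reports a mean activation
# value for a few regions.
STUDIES = [
    {"terms": {"memory"}, "activation": {"hippocampus": 0.9, "pfc": 0.6, "amygdala": 0.2}},
    {"terms": {"memory", "emotion"}, "activation": {"hippocampus": 0.7, "pfc": 0.5, "amygdala": 0.8}},
    {"terms": {"emotion"}, "activation": {"hippocampus": 0.3, "pfc": 0.4, "amygdala": 0.9}},
    {"terms": {"attention"}, "activation": {"hippocampus": 0.2, "pfc": 0.9, "amygdala": 0.1}},
]


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def rank_regions(term, studies):
    """Rank regions by how strongly their activation tracks a term across studies."""
    # 1.0 where a study is tagged with the term, 0.0 otherwise.
    labels = [1.0 if term in s["terms"] else 0.0 for s in studies]
    regions = studies[0]["activation"].keys()
    scores = {r: pearson(labels, [s["activation"][r] for s in studies]) for r in regions}
    # Highest correlation first.
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

Calling `rank_regions("memory", STUDIES)` ranks the hippocampus first in this toy data set. Every region is active to some degree in every study, so the output is a graded ranking of correlations rather than a one-to-one assignment of regions to cognitive terms.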

Dan Burnston

In the last part of the chapter, I flesh out the normative upshot of the proposal for neuroscientific investigation and for brain mapping projects. Ultimately, the upshot in each case is that we need to focus more on distinctions between types of task. It is the information processing needed to assess the task context, and to manipulate information for the specific task at hand, that explains behavior. More attention is therefore needed to demarcating and taxonomizing tasks, both in terms of their internal structure and in comparison with other tasks. I thus propose that "task ontology," rather than cognitive ontology, is of primary importance for progress in cognitive neuroscience.

The standard framework is realist about cognitive functions, but it does not assume that we know all of them, or that our intuitive list is correct. What it does assume is that cognitive functions are distinct kinds that are instantiated in specific neural activity, and that there is a fact of the matter about which function is being instantiated at any given time. Unfortunately, these assumptions are challenged by much of the recent data about the functions of parts of the brain. In particular, individual parts of the brain are multifunctional, and the activity corresponding to particular purported faculties is distributed. This means that there are no discrete patterns of instantiation corresponding to cognitive functions. Any individual area can be involved in a variety of functions, and any function's pattern of instantiation will overlap with those of others. Hence, the discrete instantiation relations required by the standard framework fail to obtain.

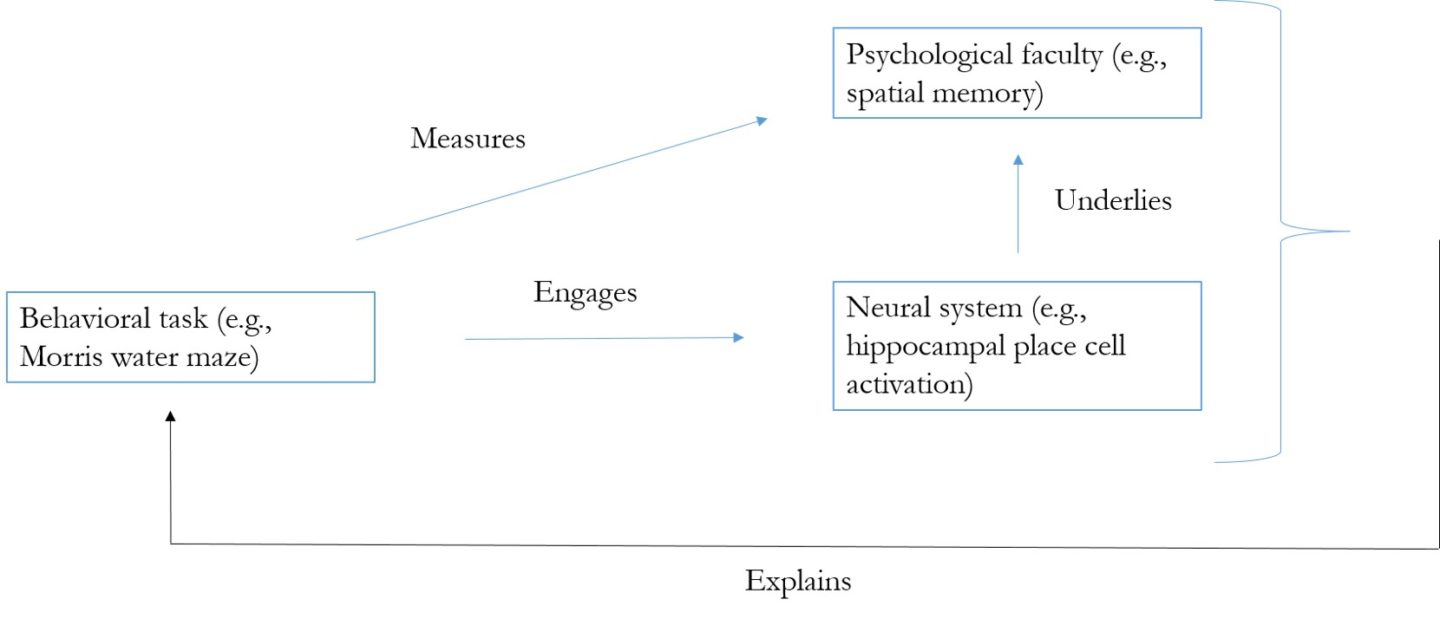

The question of cognitive ontology is usually assessed relative to what I call the standard explanatory framework of cognitive neuroscience. On this view, cognitive categories such as "perception," "emotion," "memory," and "attention" are (i) real natural kinds that (ii) explain behavior and (iii) are instantiated in neural activity. When a subject performs a cognitive task, the task is taken to measure the cognitive function of interest and to engage the brain areas that instantiate that function. The instantiation of the function in the brain then explains performance on the task. Figure 1 shows this framework schematically.

Tulane Brain Institute

Further, there are difficulties with the proposal to revise our cognitive ontology. Databasing and brain mapping projects take our extant cognitive ontology as a given in the analysis. That is, they start from the cognitive functions that researchers take themselves to be investigating. Given the failure of discrete instantiation relations, then, the projects themselves offer no resources for amending that ontology in any specific way.

Tools come in a variety of forms. Many experimental tools are ways of intervening upon and measuring the system of interest. Other tools, however, are analytical tools – tools for organizing and scrutinizing data taken from measurement. A major methodological advance in recent cognitive neuroscience is to employ tools of databasing and machine learning to draw conclusions about data from a wide range of brain imaging experiments. The hope is that these tools will allow neuroscientists to draw conclusions about the large-scale functional organization of the brain.

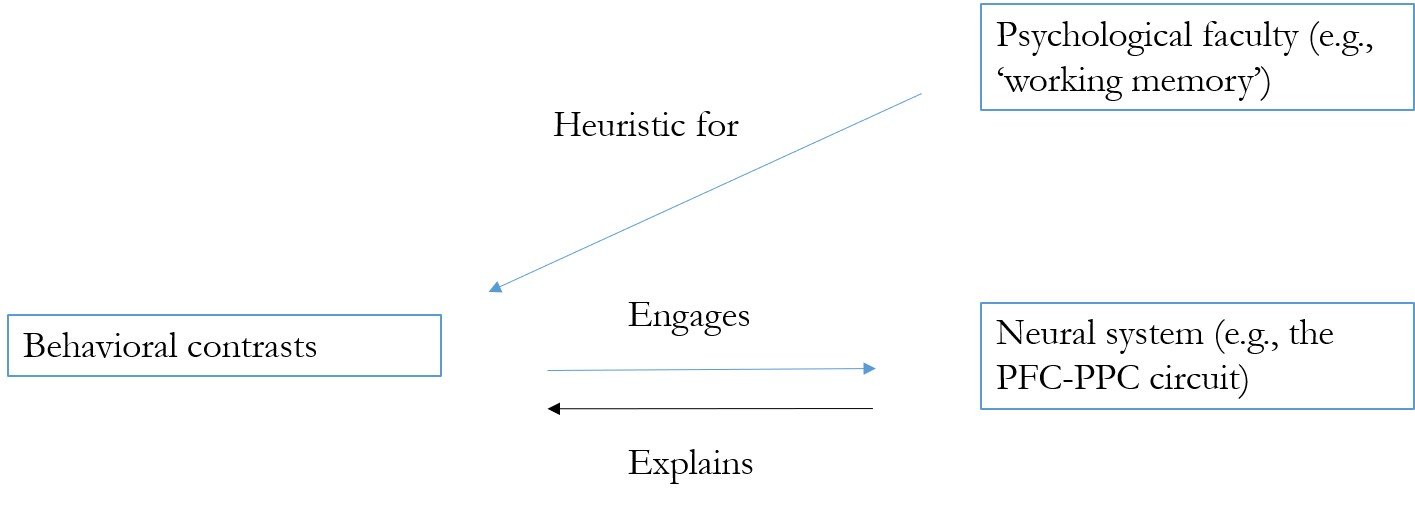

In response, I suggest that the problem is not our everyday cognitive ontology but the standard framework of cognitive neuroscientific explanation. I make what I take to be a radical proposal: posits of cognitive functions do not explain behavior. Instead, they provide heuristics for uncovering differences between behaviors that may make a difference to how the brain processes information in particular task contexts. Information processing in the brain then directly explains behavior, without requiring a fact of the matter about which cognitive faculty, if any, is instantiated. Figure 2 shows this alternative explanatory framework.

The question I analyze in the chapter is whether databasing and brain mapping projects substantiate the standard framework together with our intuitive list of cognitive functions, substantiate the framework only after revising our cognitive ontology, or suggest abandoning the standard framework altogether. I argue for the last option. The problem with the first option is that the projects further establish, rather than overcome, the issues of multifunctionality and distribution. While the analyses have shown that one can correlate specific patterns of activity with putative cognitive functions, they have not shown that each cognitive function has a distinct neural realizer; rather, it is specific patterns of activity across multifunctional and distributed circuits that correlate with cognitive functions.

Why have neuroscientists felt the need to pursue these analyses? At least partly, it is because of concern about our cognitive ontology: what cognitive functions there are, how they interact with each other, and how and whether they are instantiated in the brain. In particular, do our intuitive categories of mental functions, such as "perception," "emotion," "memory," and "attention," really map onto distinct functional mechanisms in the brain? Or do we need to revise, or even abandon, these intuitive categories? Databasing and brain mapping projects are pitched as helping us to address these questions. In the chapter, I analyze their potential to do so.

Philosophy Department, Tulane University